I’m affectionately calling this post Azure + Tableau Server = Flex for two reasons. First – are you a desktop user who has always wanted to extend your skills to Tableau as a platform? Or perhaps you’re someone who is just inherently curious and gains confidence by learning and doing (I fall into this camp). Well, then this is the blog post for you.

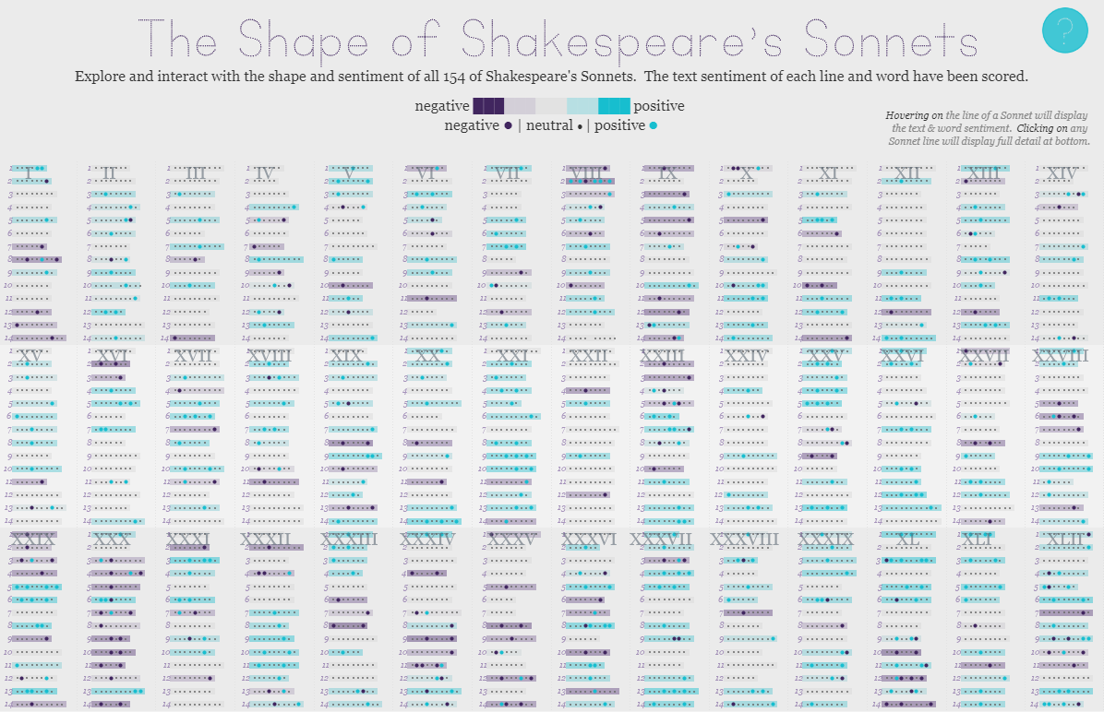

Let me back up a bit. I am very fortunate to spend the majority of my working time (and a good chunk of my free time!) advocating for visual analytics and developing data visualizations that demonstrate the value it brings. That means doing things like speaking with end users, developing requirements, partnering with application and database owners/administrators, identifying and documenting important metrics, and finally (admittedly one of the more enjoyable components) partnering with the end user on the build-out and functionality of what they’re looking for. It’s a very iterative process, with a fair amount of communication and problem solving sprinkled into pure development time. A lucky job. The context here is this: as soon as you start enabling people to harness the power of data visualization and visual analytics, the immediate next conversation becomes: how can I share this with the world (or ‘my organization’)? Aha! We’ve just stepped into the world of Tableau Server.

Tableau Server and Tableau Online bring the capability to share the visualizations you’re making with everyone around you. They do exactly what you want: share interactive, data-rich displays via a URL. Just the thought of it gets me misty-eyed. But, as with any excellent technology tool, it comes with the responsibility of implementation, maintenance, security, cost, and ultimately a lot of planning. And this is where the desktop developer can hit a wall in taking things to the next level. When you’re working with IT folks or someone who has done something like this in the past, you’ll be hit with a wall of questions that runs the entire length of every potential ‘trap’ or ‘gotcha’ moment you’re likely to experience with a sharing platform. And more than that, you’re tasked with knowing the answers immediately. Just when you thought getting people to add terms like tooltip, boxplot, and dot plot to their vocabulary was exciting, they start using words like performance, permissions, and cluster.

So what do you do? You start reading through administration guides, beefing up your knowledge of the platform, and most likely extending your initial publisher perspective of Tableau Server to the world of the server administrator or site administrator. But along the way you may get this feeling (I certainly have): I know how to talk about it, but I’ve never touched it. This is all theoretical. I’ve built out an imaginary instance in my mind a million times, but I’ve never clicked the buttons. It’s the difference between talking through the process of baking and decorating a wedding cake and actually doing it. And if we really think about it, you’d be much more likely to trust someone who can say “yeah, I’ve baked wedding cakes and served them” as opposed to someone who says “I’ve read every article and recipe and how-to in the world on baking wedding cakes.”

Finally we’re getting to the point, and this is where Azure comes into play. Instead of stopping your imaginary implementation because you don’t have the hardware, authority, or money to test it out and actually UNBOX the server, use Azure and finish it out. Build the box.

What is Azure? It’s Microsoft’s extremely deep and rich platform for a wide variety of cloud services. Why should you care? It gives you the ability to deploy a Tableau Server test environment through a website, oh, and they give you money to get started. Now I’ll say this right away: Azure isn’t the only option. There’s also Amazon’s AWS. I have accounts with both, and I’ve used them both. They are both rich and deep, and I don’t have a preference. For the sake of this post, Azure was attractive because you get free credits and it’s the tool I used for my last sandbox adventure.

So it’s really easy to get started with Azure. You can head over to their website and sign up for a trial. At the time of writing they were offering a 30-day free trial and $200 in credits. This combination is more than enough to get started building your box. (BTW: nobody has told me to say these things or offered me money for this – I am writing about this because of my own personal interest.)

Now once you get started, there are two paths you can take. The first is to search the marketplace for Tableau Server. When you do that, there are literally step-by-step configuration settings to get you to deployment: you start with basic configuration settings and work all the way through to the end. It’s an easy path to get to the Server, but it’s outside the scope of where I’m taking this. Instead we’re going to take the less defined path.

Why not use the marketplace process? Well, I think the less defined path offers the true start-to-finish experience: hardware sizing through to software installation and configuration. Building the machine from scratch (albeit a virtual machine) mimics the entire process more closely than using a wizard. You have fewer guard rails, more opportunity for exploration, and the responsibility of getting to the finish line correctly is completely in your hands.

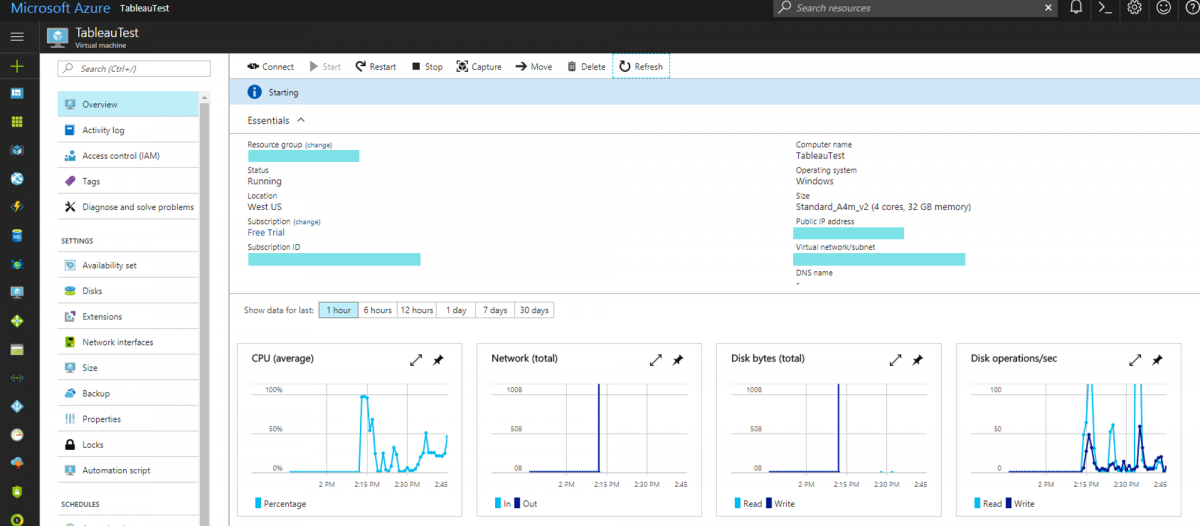

So here’s how I started: I made a new resource, a Windows Server 2012 R2 Datacenter box. To do that, you go through the marketplace again and choose that as the box type. It’s probably a nearly identical process to the marketplace Tableau Server setup: make a box, size the box, add optional features, and go. To bring it closer to home, go through the exercise of comparing Tableau’s minimum requirements against its recommended requirements. For a single-node box you’ll need to figure out the number of CPUs (cores), the amount of RAM (memory), and the disk space you’ll want. When I did this originally I tried to start cheap: I looked through the billing costs of the different machines on Azure and started at the minimum. In retrospect I would say go with something more powerful. You’ll always have the option to resize/re-class the hardware, but starting off with a decent amount of power will prevent a slow install and degraded initial Server performance.
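If you want to make the minimum-vs-recommended exercise concrete before committing to a VM size, a tiny checklist helps. The sketch below is a hypothetical helper (the `VmSize`, `shortfalls`, and `classify` names are mine, not part of Azure or Tableau) that compares a candidate VM against two thresholds you fill in yourself from Tableau’s current published requirements. The numbers in `MINIMUM` and `RECOMMENDED` are placeholders, not Tableau’s actual figures.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class VmSize:
    """Hardware profile of a single-node box."""
    cores: int
    ram_gb: int
    disk_gb: int


def shortfalls(vm: VmSize, target: VmSize) -> list[str]:
    """List every dimension where `vm` falls below `target`."""
    gaps = []
    for name in ("cores", "ram_gb", "disk_gb"):
        have, need = getattr(vm, name), getattr(target, name)
        if have < need:
            gaps.append(f"{name}: have {have}, need {need}")
    return gaps


def classify(vm: VmSize, minimum: VmSize, recommended: VmSize) -> str:
    """Place a candidate VM relative to the minimum and recommended specs."""
    if shortfalls(vm, minimum):
        return "below minimum"
    if shortfalls(vm, recommended):
        return "meets minimum only"
    return "meets recommended"


# PLACEHOLDER thresholds: replace with Tableau's published requirements.
MINIMUM = VmSize(cores=4, ram_gb=8, disk_gb=50)
RECOMMENDED = VmSize(cores=8, ram_gb=32, disk_gb=100)

candidate = VmSize(cores=4, ram_gb=16, disk_gb=128)
print(classify(candidate, MINIMUM, RECOMMENDED))  # meets minimum only
print(shortfalls(candidate, RECOMMENDED))
# ['cores: have 4, need 8', 'ram_gb: have 16, need 32']
```

Listing the gaps, rather than just returning pass/fail, makes it easier to see which Azure size class to step up to.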

Once you’ve created the resource, you literally click a button to boot up the box and get started. It took about 15 to 20 minutes for my box to be built initially, which was more than I was expecting.

Everything done up to this point is to get you to a place where you have your own Tableau Server that you can do whatever you want with. You can set up the type of security and configure different components: essentially get down to the nitty-gritty of what it feels like to be a server administrator.

Your virtual machine should have access to the internet, so the next step is to download the Tableau Server software. Here’s a somewhat pro tip: consider downloading a previous version of the server software so that you can upgrade and test out what that feels like. Think about the difference between major and minor releases and how the upgrade process differs between them. For this adventure I started with 10.0.11 and ended up upgrading to 10.3.1.
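To keep the major/minor distinction straight while planning an upgrade path, you could label the jump between two version strings. This is a minimal sketch that assumes a simple semantic-versioning-style reading (first number major, second minor, third maintenance); Tableau’s own release naming may not line up with these labels exactly, so treat the output as a planning aid, not official guidance.

```python
from __future__ import annotations


def parse_version(text: str) -> tuple[int, int, int]:
    """Turn '10.0.11' into (10, 0, 11); missing parts default to 0."""
    parts = [int(p) for p in text.strip().split(".")]
    if not 1 <= len(parts) <= 3:
        raise ValueError(f"expected 1-3 dotted numbers, got {text!r}")
    parts += [0] * (3 - len(parts))
    return (parts[0], parts[1], parts[2])


def upgrade_kind(current: str, target: str) -> str:
    """Label the jump between two versions (semver-style assumption)."""
    cur, tgt = parse_version(current), parse_version(target)
    if tgt <= cur:
        raise ValueError(f"{target} is not newer than {current}")
    if tgt[0] != cur[0]:
        return "major"
    if tgt[1] != cur[1]:
        return "minor"
    return "maintenance"


print(upgrade_kind("10.0.11", "10.3.1"))  # minor
```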

The actual install is on the level of “stupid easy.” But you probably wouldn’t feel comfortable saying “stupid easy” unless you’ve actually done it. There are a few click-through windows with clear instructions, but for the most part it installs start to finish without much input from you.

You get to this window here once you’ve finished the install process.

This is literally the next step, and it shows the depth to which you can administer the platform from within the server (from a menu/GUI perspective). Basic things can be tweaked and set up: the type of authentication, SMTP (email) for alerts and subscriptions, and the all-important Run As User account. Reading through the Tableau Server: Everybody’s Install Guide is the best way to prepare for this point, especially because of something I alluded to earlier: the majority of this is really in the planning of the implementation, not the unboxing or build.

Hopefully by this point the confidence you’ve gained going through this process has you feeling invincible. You can take your superhero complex to the next level with the following tasks:

Start and Stop the Server via Tabadmin. This is a great exercise because you’re using the command line utility to interact with the Server. If you’re not someone who spends a lot of time doing these kinds of tasks, it can feel weird, but going through the act of starting and stopping the server will make you feel much more confident. My personal experience was also interesting here: I like Tabadmin better than the basic utilities, because with Tabadmin you know exactly what’s going on. Here’s the difference between the visual status indicator and what you get from Tabadmin.
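If you want to script the start/stop exercise, a thin wrapper around Tabadmin is enough. This is a sketch that assumes a default Tableau Server 10.3 install folder on Windows (adjust the version folder to match what you installed), that it runs from an administrator session on the server VM, and that it uses only the `start`, `stop`, `restart`, and `status -v` commands. The helper names `tabadmin_command` and `run_tabadmin` are hypothetical.

```python
from __future__ import annotations

import subprocess
from pathlib import PureWindowsPath

# ASSUMPTION: default bin folder for a Tableau Server 10.3 install on Windows.
# Change the version folder (e.g. 10.0) to match what you installed.
DEFAULT_BIN = PureWindowsPath(r"C:\Program Files\Tableau\Tableau Server\10.3\bin")

# The Tabadmin actions this sketch allows, with any extra arguments.
ACTIONS = {
    "start": [],
    "stop": [],
    "restart": [],
    "status": ["-v"],  # verbose: report the status of individual processes
}


def tabadmin_command(action: str, bin_dir: PureWindowsPath = DEFAULT_BIN) -> list[str]:
    """Build the argument list for one Tabadmin action."""
    if action not in ACTIONS:
        raise ValueError(f"unsupported action: {action!r}")
    return [str(bin_dir / "tabadmin.exe"), action, *ACTIONS[action]]


def run_tabadmin(action: str, bin_dir: PureWindowsPath = DEFAULT_BIN) -> str:
    """Run Tabadmin on the server VM and return its console output."""
    result = subprocess.run(
        tabadmin_command(action, bin_dir),
        capture_output=True,
        text=True,
        check=True,  # raise CalledProcessError if Tabadmin exits non-zero
    )
    return result.stdout
```

On the VM, `print(run_tabadmin("status"))` would show the same kind of detail you see when typing `tabadmin status -v` by hand, which is handy for a quick "is it actually up?" check after a `stop`/`start` cycle.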

When you right-click and ask for server status, it takes some time to display the status window. When you’re doing the same thing in Tabadmin, it’s easier to tell that the machine is ‘thinking.’

Go to the Status section and see what it looks like. Especially if you’re a power user from the front end (publisher, maybe even site administrator) – seeing the full details of what is in Tableau Server is exciting.

There are some good details in the Settings area as well. This is where you can add another site if you want.

Once you’ve gotten this far in the process, the future is yours. You can start to publish workbooks and tinker with settings. The possibilities are really limitless, and with each step you’ll be working toward understanding and feeling what it means to go through it. And of course, the best part of it all: if you ruin the box, just destroy it and start over! You’ve officially detached yourself from the chains of responsibility and are freely developing in a sandbox. It is your chance to get comfortable and do whatever you want.

I’d even encourage you to interact with the API. See what you can do with your site. Even if you use an assisted API process (think the Alteryx Output to Tableau Server tool), you’ll find yourself getting much more savvy at speaking Server and that much closer to owning a deployment in a professional environment.
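A natural first API exercise is signing in and getting an authentication token from the REST API. This sketch builds the request for the `auth/signin` endpoint and pulls the token and site ID out of the XML response. It assumes REST API version 2.6 (which I believe pairs with Tableau Server 10.3; confirm against the version table in Tableau’s REST API documentation) and a server URL you supply. The helper names are hypothetical, and the sample response in the usage line is illustrative, not captured from a real server.

```python
from __future__ import annotations

import urllib.request
import xml.etree.ElementTree as ET

# Namespace used by Tableau REST API response documents.
NS = {"t": "http://tableau.com/api"}
API_VERSION = "2.6"  # ASSUMPTION: REST API version for Tableau Server 10.3


def signin_url(server: str, api_version: str = API_VERSION) -> str:
    """e.g. https://myserver -> https://myserver/api/2.6/auth/signin"""
    return f"{server.rstrip('/')}/api/{api_version}/auth/signin"


def signin_body(username: str, password: str, site_content_url: str = "") -> bytes:
    """Build the tsRequest XML; an empty contentUrl means the Default site."""
    request = ET.Element("tsRequest")
    creds = ET.SubElement(request, "credentials", name=username, password=password)
    ET.SubElement(creds, "site", contentUrl=site_content_url)
    return ET.tostring(request)


def parse_signin(response_xml: bytes) -> tuple[str, str]:
    """Pull (token, site id) out of a sign-in response."""
    root = ET.fromstring(response_xml)
    creds = root.find("t:credentials", NS)
    site = creds.find("t:site", NS) if creds is not None else None
    if creds is None or site is None:
        raise ValueError("no credentials/site element in response")
    return creds.get("token", ""), site.get("id", "")


def sign_in(server: str, username: str, password: str,
            site_content_url: str = "") -> tuple[str, str]:
    """POST the sign-in request and return (token, site id)."""
    req = urllib.request.Request(
        signin_url(server),
        data=signin_body(username, password, site_content_url),
        headers={"Content-Type": "application/xml"},
        method="POST",
    )
    with urllib.request.urlopen(req) as resp:
        return parse_signin(resp.read())


sample = (b'<tsResponse xmlns="http://tableau.com/api">'
          b'<credentials token="abc123"><site id="site-1" contentUrl=""/>'
          b'<user id="user-1"/></credentials></tsResponse>')
print(parse_signin(sample))  # ('abc123', 'site-1')
```

Later calls send the token in an `X-Tableau-Auth` header and use the site ID in the URL, for example a GET to `/api/2.6/sites/<site-id>/workbooks` to list workbooks. Since the password travels in the request body, point this at an HTTPS endpoint once your sandbox has SSL configured.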