Last week at Tableau’s customer conference (TC18) in New Orleans, I had the pleasure of speaking in three different sessions, all extremely hands-on in Tableau Desktop. Two of the sessions focused exclusively on tips and tricks (to make you smarter and faster), so I wanted to take the time to slow down and share with you the how behind my favorite mapping tip. And that tip just so happens to be: how to create dynamic coloring based on quantiles for maps.

First, a refresher on what quantiles are. Quantiles are cut points placed within an ordered data distribution so that it splits into groups containing equal numbers of observations. The most popular quantile is the quartile, which partitions data into 0 to 25%, 25 to 50%, 50 to 75%, and 75 to 100%. We see quartiles all the time in boxplots, and they’re something we’re quite comfortable with. Quantiles are valuable because they line up all the measurements from smallest to largest and bucket them into groups – so when you use something like color, it no longer represents the actual value of a measurement, but instead the bucket (quantile) that the measurement falls into. This is particularly useful when measurements are either widely dispersed or very tightly packed.
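To make the idea of cut points concrete, here is a small Python sketch on a hypothetical, heavily skewed set of eight counts (invented for illustration, not the post’s data). It uses Python’s `statistics.quantiles` with its default “exclusive” interpolation method; other tools, Tableau included, may interpolate cut points slightly differently, so the exact cut values are an assumption of this sketch. Each value is then assigned a quartile bucket, and for contrast the sketch shows where the same values would land on a plain linear color ramp.

```python
import bisect
import statistics

# Hypothetical, heavily skewed counts (not the post's data)
data = [1, 2, 3, 5, 8, 40, 900, 270_000]

# Quartile cut points: three values that split the sorted data into four equal-count groups
cuts = statistics.quantiles(data, n=4)
print(cuts)  # → [2.25, 6.5, 685.0]

# Bucket 1..4 for each value: the number of cut points at or below it, plus one
quartile = [bisect.bisect_right(cuts, v) + 1 for v in data]
print(quartile)  # → [1, 1, 2, 2, 3, 3, 4, 4]

# For contrast: each value's position on a linear color ramp from min (0.0) to max (1.0)
lo, hi = min(data), max(data)
ramp = [(v - lo) / (hi - lo) for v in data]
print(sum(r < 0.01 for r in ramp))  # → 7 (all but the largest value sit in the bottom 1% of the ramp)
```

On the linear ramp, seven of the eight values would share essentially the same color, while the quartile buckets give each color two values.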

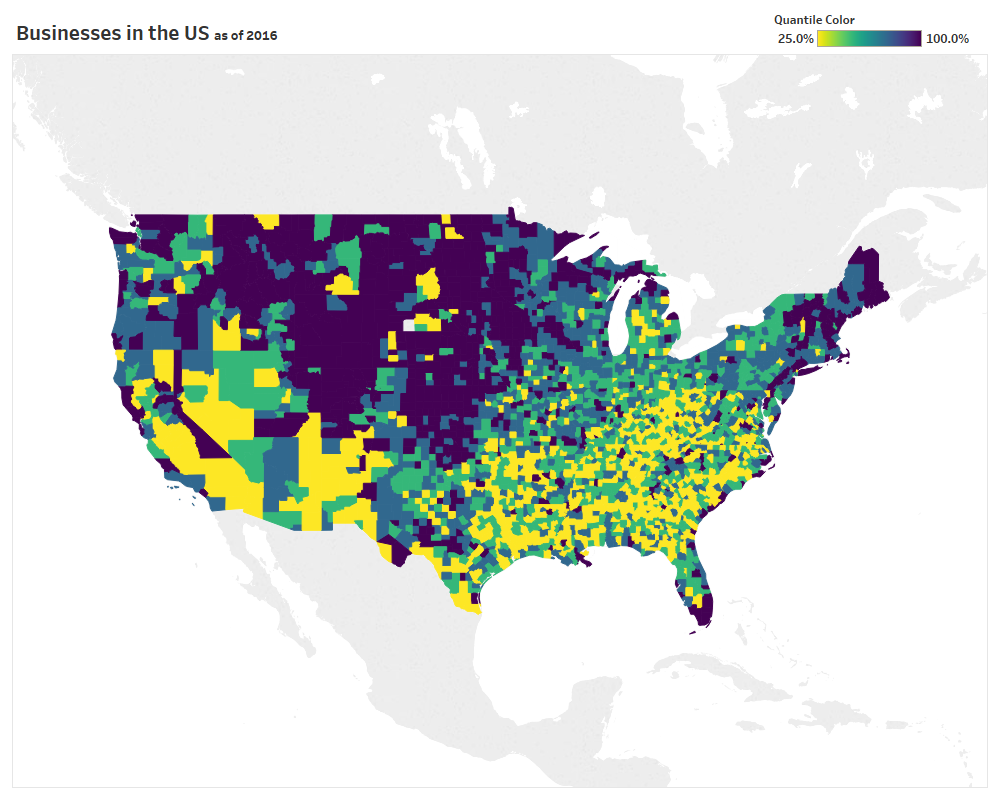

Here’s my starting point example – this is a map showing the number of businesses per US county circa 2016.

The number of businesses per county ranges widely, from 1 all the way to about 270k. With so much variation in my data set, it’s hard to understand more nuanced trends or truly answer the question “which areas in the US have more businesses?”

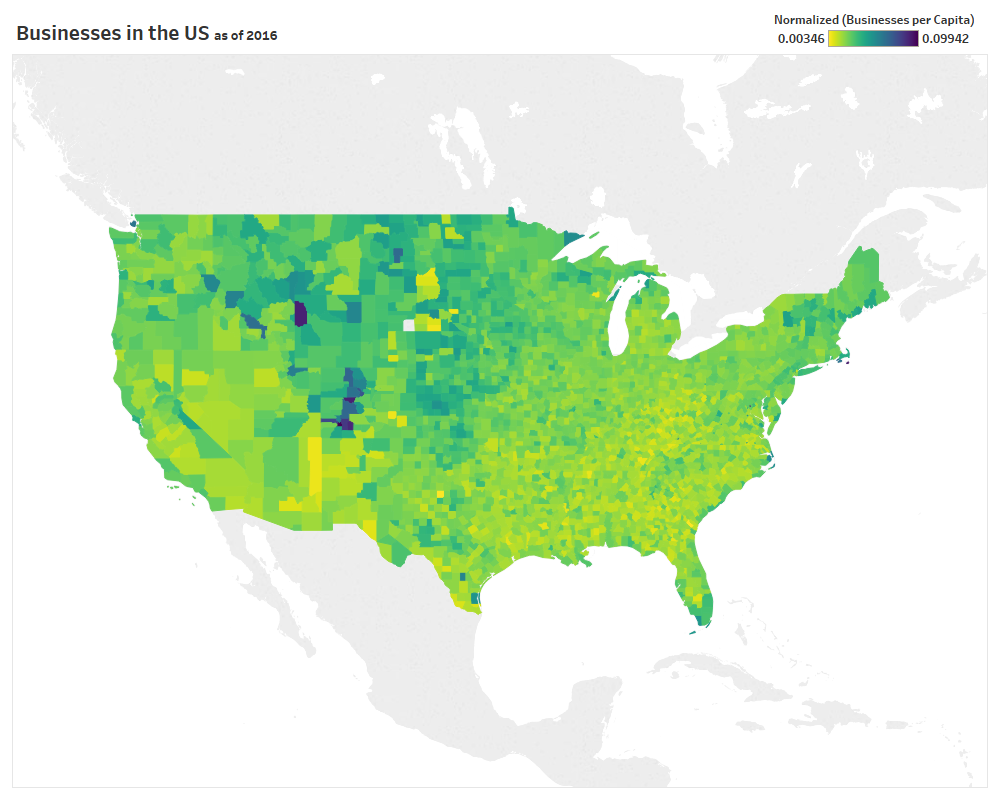

A good first step would be to normalize by population to create a per capita measurement. Here’s the updated visualization. Notice that while it’s improved, I’m now running into a new issue: all my color is concentrated around the middle.
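Normalizing is a single division per county. The sketch below uses two invented counties, and the “per 1,000 residents” unit is my own assumption (the post doesn’t state which unit the map uses); `per_capita` is a hypothetical helper name. It shows how a county with far fewer businesses can still come out ahead once population is taken into account.

```python
def per_capita(businesses, population, per=1_000):
    """Businesses per `per` residents.

    Multiplying before dividing avoids an inexact intermediate such as 0.027.
    """
    return businesses * per / population


# Hypothetical counties: (number of businesses, population), not the post's data
counties = {
    "Big County": (270_000, 10_000_000),
    "Small County": (50, 1_000),
}

for name, (businesses, population) in counties.items():
    print(name, per_capita(businesses, population))
# Big County 27.0
# Small County 50.0
```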

The trend, or data story, has changed: my eyes are now drawn toward the dark blue in Colorado and Wyoming. But I’m still having a hard time drawing distinctions and answering my question of “which areas in the US have the most businesses?”

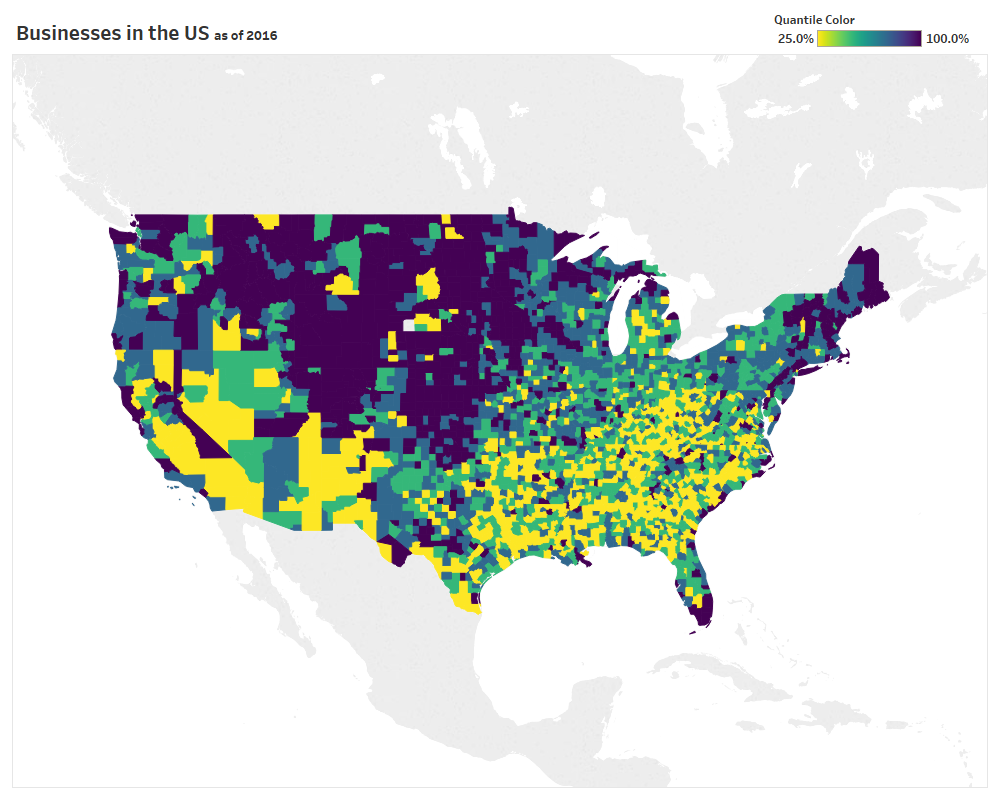

So as a final step, I can convert my measurements to percentiles and bucket them into quantiles. Here’s the same normalized data set, now turned into quartiles.

I now have 4 distinct color buckets and a much richer data display to answer my question. Furthermore, I can make the legend dynamic (leading back to the title of this blog post) by using a parameter. Making the quantiles dynamic involves 3 steps:

1. Turn your original metric (the normalized per capita in my example) into a percentile by creating a “Percentile” Quick Table Calculation. Save the percentile calculation for later use.

2. Determine how many quantiles you will allow (I chose between 4 and 10). Create an integer parameter whose range matches your specification.

3. Create a calculated field that will bucket your data into the desired quantile based on the parameter.

You’ll notice that the Quantile Color calculation depends on the number of quantiles in your parameter and will need to be adjusted if you go above 10.
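To see the three steps working together outside Tableau, here is a minimal Python sketch under assumptions of my own. The percentile is defined as rank divided by count (Tableau’s Percentile table calculation may treat the lowest value or ties differently), the names `percentile`, `quantile_bucket`, `MIN_QUANTILES`, and `MAX_QUANTILES` are hypothetical, and the bucket comes from a ceiling formula rather than whatever the workbook’s Quantile Color calculation actually contains. With a closed-form bucket like this, going past 10 would only mean widening the allowed parameter range.

```python
import math
from fractions import Fraction

# Step 2: allowed parameter values (the post uses an integer parameter from 4 to 10)
MIN_QUANTILES, MAX_QUANTILES = 4, 10


def percentile(values):
    """Step 1 (assumed definition): rank / count, smallest first, so results fall in (0, 1].

    Fractions keep the arithmetic exact; ties are broken by input order.
    """
    n = len(values)
    order = sorted(range(n), key=lambda i: values[i])
    result = [Fraction(0)] * n
    for rank, i in enumerate(order, start=1):
        result[i] = Fraction(rank, n)
    return result


def quantile_bucket(p, n_quantiles):
    """Step 3: map a percentile in (0, 1] to a bucket numbered 1..n_quantiles."""
    if not MIN_QUANTILES <= n_quantiles <= MAX_QUANTILES:
        raise ValueError(
            f"n_quantiles must be between {MIN_QUANTILES} and {MAX_QUANTILES}"
        )
    # ceil(p * N): the lowest 1/N of ranks gets 1, the next 1/N gets 2, and so on
    return math.ceil(p * n_quantiles)


# Hypothetical per capita values for 20 counties (not the post's data)
per_capita = [0.5, 0.6, 1.1, 1.2, 1.3, 1.4, 1.5, 1.6, 1.7, 1.8,
              1.9, 2.0, 2.1, 2.2, 2.3, 2.4, 2.5, 3.0, 7.5, 27.0]
pct = percentile(per_capita)
for n_quantiles in (4, 10):
    print(n_quantiles, [quantile_bucket(p, n_quantiles) for p in pct])
```

With 4 quantiles, each bucket receives 5 of the 20 invented counties; with 10, each receives 2, which is the same quartile-to-decile progression the legend walks through.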

Now you have all the pieces in place to make your dynamic quantile color legend. Here’s a quick animation showing the progression from quartiles to deciles.

The next time you have data where you’re using color to represent a measure (particularly on a map) and you’re not finding much value in the visual, consider creating static or dynamic quantiles. You’ll be able to unearth hidden insights and help segment your data to make it easier to focus on the interesting parts.

And if you’re interested in downloading the workbook you can find it here on my Tableau Public.